Will AI Kill the Pen Testing Star?

Those who use the various AI support tools available are seeing a clear trend: generally speaking, the results these tools produce are getting better every month. The old computing problem of garbage in, garbage out still applies, but as we learn to seed the bots better, and the bots learn what data we want and how we want it presented, the current AI tools are delivering some excellent solutions.

What is the implication for the cybersecurity industry, specifically penetration testing?

To oversimplify, the art of pen testing is to review the configuration of an organisation’s digital and non-digital assets, processes and procedures, and to perform simulated attacks on them, in order to find weaknesses or vulnerabilities that a malicious actor could exploit. If we assume that most organisations operate in similar ways and/or do not make significant changes to their business systems and processes on a regular basis, the digital side of this work could be as simple as setting up an automated AI process to probe and monitor those systems regularly. One very simple reason pen testers and cybersecurity experts will continue to be required is the social engineering, or human, component of the process: no AI can tailgate an employee into a restricted area. But for the sake of this thought experiment, let’s explore only the digital component of the penetration testing process.

A very interesting addition to the computing ecosystem was the rollout of the first exascale computer, Frontier, last year. Frontier can perform one quintillion calculations per second: 10 to the power of 18, or a billion billion (18 zeros). Scientists estimate that the human brain performs roughly an exaflop, again a billion billion operations per second, so we now have the first machine that can process data at about the estimated speed of a human brain.

What does this mean?

AI can follow a list of processes and has demonstrated the ability to reason and solve complex problems. We now have a machine that can process data at roughly the speed of a human brain, and every machine connected to the internet has access to a vast share of humanity’s recorded knowledge.

Advocates for AI-driven penetration testing argue that AI can analyse vast networks and systems at speeds far beyond human capabilities. AI algorithms can continuously scan for vulnerabilities, process large datasets, and identify complex security flaws much faster than a human can. This efficiency is especially critical in a landscape where new vulnerabilities and attack vectors emerge daily. By leveraging AI, organisations can maintain constant vigilance and faster response times, potentially stopping cyber threats before they cause significant damage.
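The basic "continuous scanning" idea can be illustrated without any AI at all. The snippet below is a minimal sketch, assuming a host you are explicitly authorised to test and a plain TCP connect check (far simpler than a real scanner such as Nmap, and not an AI model). It rescans a list of ports at a fixed interval and reports any port that has appeared or disappeared since the previous scan. The function names `open_ports`, `diff_snapshots` and `monitor` are hypothetical, chosen for illustration only.

```python
import socket
import time
from typing import Dict, Iterable, Optional, Set


def open_ports(host: str, ports: Iterable[int], timeout: float = 0.5) -> Set[int]:
    """Return the subset of `ports` that accept a TCP connection on `host`."""
    found: Set[int] = set()
    for port in ports:
        try:
            # A completed TCP handshake means something is listening on the port.
            with socket.create_connection((host, port), timeout=timeout):
                found.add(port)
        except OSError:
            # Refused, timed out, filtered or unreachable: treat as not open.
            pass
    return found


def diff_snapshots(previous: Set[int], current: Set[int]) -> Dict[str, Set[int]]:
    """Compare two scans and report which ports appeared or disappeared."""
    return {
        "newly_open": current - previous,
        "newly_closed": previous - current,
    }


def monitor(host: str, ports: Iterable[int], rounds: int, interval_s: float,
            baseline: Optional[Set[int]] = None) -> Set[int]:
    """Hypothetical monitoring loop: rescan `host` `rounds` times and print changes.

    With no baseline, every open port is reported as newly open on the first round.
    Returns the result of the last scan.
    """
    port_list = list(ports)  # allow a generator to be reused across rounds
    previous = set(baseline) if baseline is not None else set()
    for i in range(rounds):
        if i > 0:
            time.sleep(interval_s)  # wait between scans, not before the first one
        current = open_ports(host, port_list)
        changes = diff_snapshots(previous, current)
        if changes["newly_open"] or changes["newly_closed"]:
            print(f"{host}: newly open {sorted(changes['newly_open'])}, "
                  f"newly closed {sorted(changes['newly_closed'])}")
        previous = current
    return previous
```

For example, `monitor("127.0.0.1", [22, 80, 443], rounds=3, interval_s=60)` would check three common ports on the local machine once a minute. In an AI-assisted setup, a model might be layered on top of a loop like this to decide what to probe next and how to interpret the changes it reports.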

Another point in favour of AI is its scalability. AI systems can be deployed across multiple networks and environments simultaneously while maintaining consistent quality in vulnerability assessment. This scalability is particularly beneficial for large organisations with extensive IT infrastructure. Human penetration testers, while highly skilled, can generally focus on only one target at a time, which makes AI a more scalable option for widespread security coverage.

From a financial perspective, integrating AI into penetration testing could be more cost-effective in the long run. While the initial investment in AI technology can be high, operational costs decrease over time. AI systems do not require the same level of continuous training, benefits, or salaries as human employees, potentially offering a higher return on investment through reduced labour costs and enhanced security.

Opponents of the idea that AI will render human penetration testers obsolete highlight the inherent creativity and adaptability of humans. Penetration testing is not merely a technical task; it often requires thinking like an attacker, a feat that involves creativity, intuition, and an understanding of human psychology. These aspects allow human testers to anticipate and simulate sophisticated attack strategies that AI might not yet be capable of conceptualising, especially when dealing with novel or complex scenarios.

Cybersecurity is fraught with ethical dilemmas and decisions that affect real people and organisations. Human penetration testers can navigate these complexities with a nuanced understanding of ethics, legalities, and the potential consequences of their actions. While AI can follow pre-programmed ethical guidelines, the subtleties of ethical decision-making in real-world scenarios often require a human touch, particularly when assessing the risk and impact of potential vulnerabilities.

Cybersecurity is a constantly evolving field, with new threats and techniques developing regularly. While AI systems can learn from new data, their learning is based on past information and existing patterns. Human penetration testers can anticipate and adapt to new threats more dynamically, using their experience, knowledge of current events, and understanding of human behaviour. This ability to think outside the box and apply novel approaches to emerging threats is a critical component of effective penetration testing.

The debate on whether AI will make the human IT penetration tester obsolete is far from settled. While AI offers significant advantages in efficiency, scalability, and cost-effectiveness, the unique strengths of human testers (creativity, ethical judgement, and adaptability) remain crucial in the complex and ever-changing landscape of cybersecurity.

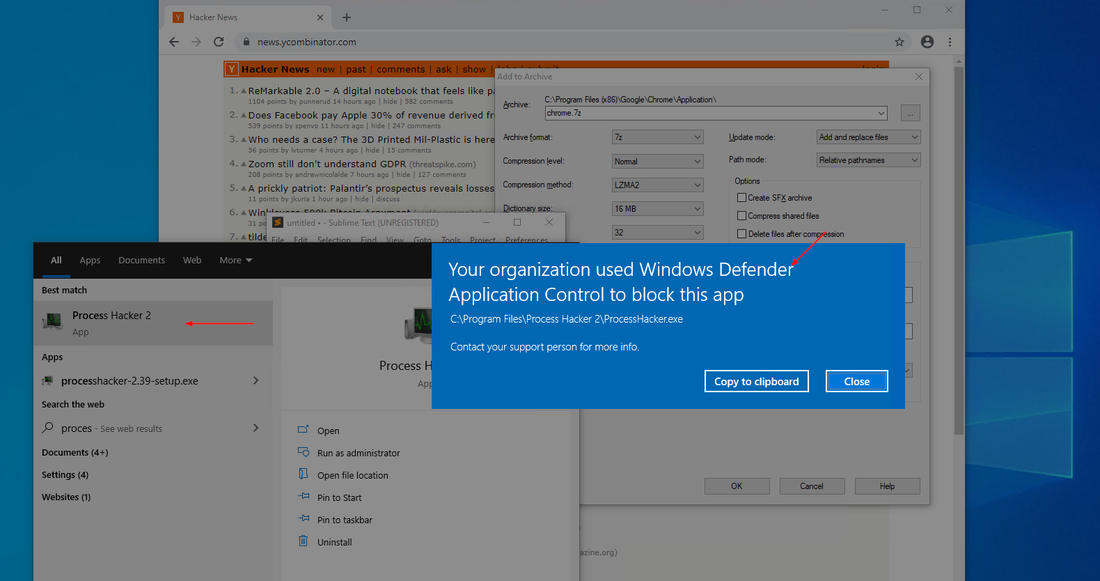

A critical point to consider when discussing human versus AI-driven security, and the subject of many science fiction plotlines, is that using AI, a software algorithm, as the primary or perhaps only defence of an organisation’s digital assets against a digital attack could be considered akin to putting a fox in charge of the hen house. A reputable, experienced and ethical cybersecurity expert who protects and monitors business systems is much more difficult to “hack” than a software algorithm. Bad actors excel at breaking into exactly the kinds of digital systems that AI uses to monitor and protect important data. There is the argument that humans are also corruptible, but a hacker would find it much easier to compromise a digital system, such as an AI penetration testing algorithm, than to corrupt a reputable pen testing expert.

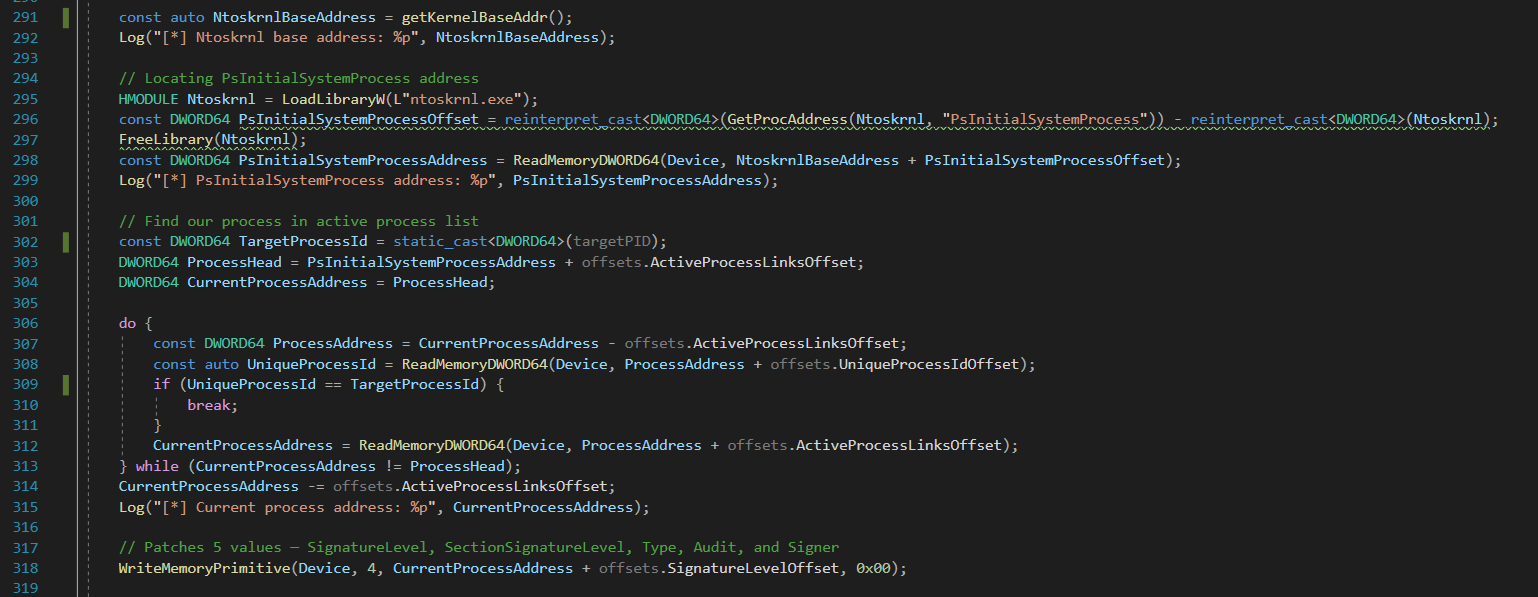

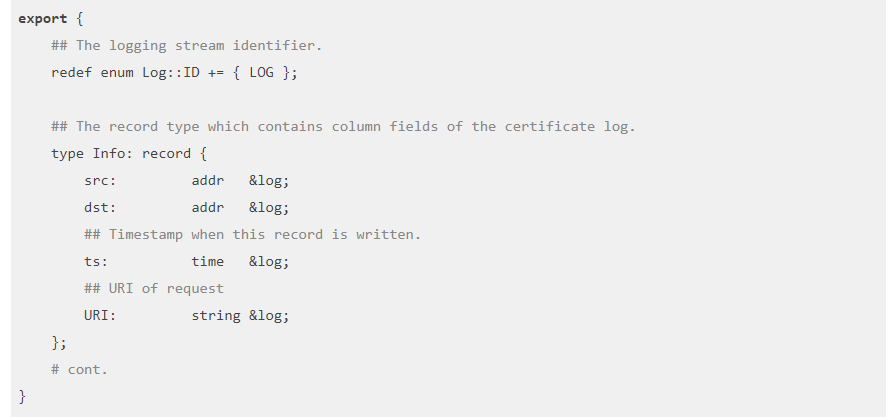

We have written a number of blog posts on how AI can help and support the penetration testing process. We have explored how to use the different free AI bots available and how to shape pen testing queries and code creation. We have also looked at how AI is shaping the industry, which is why it was worth exploring whether, and on what timeframe, AI could replace humans as the primary source of cybersecurity and penetration testing protection.

The facts clearly point to an ongoing and near-future need for humans as cybersecurity and penetration testing experts. AI will continue to improve its performance, computing power will continue to increase, humans and AI will develop new techniques to protect AI algorithms, and malicious actors will continue to push the security envelope. A combined AI and human approach to protection is the best solution, and many cybersecurity firms have used algorithms in their testing for many years. Without a significant leap in AI security and self-protection, organisations that choose not to use human security experts to protect themselves are not acting in their own best interests.