How AI is Impacting Cybersecurity and Penetration Testing

In an era of rapid technological advancement, the proliferation of AI platforms is revolutionising the way we interact with digital information, enhancing productivity, and streamlining decision-making processes. However, this same technology, designed to simplify and enrich our lives, is being weaponised by hackers and bad actors. These individuals exploit the capabilities of AI to orchestrate more sophisticated malware and social engineering attacks against businesses, posing significant cybersecurity threats.

The ability of AI platforms to process and generate human-like text is a double-edged sword. On one side, they offer immense potential for automating tasks, providing customer support, generating content, and more. On the flip side, they present a formidable tool for malicious actors. Hackers leverage these platforms to craft more convincing phishing emails, generate malicious code, automate hacking processes, and tailor social engineering tactics with unprecedented precision.

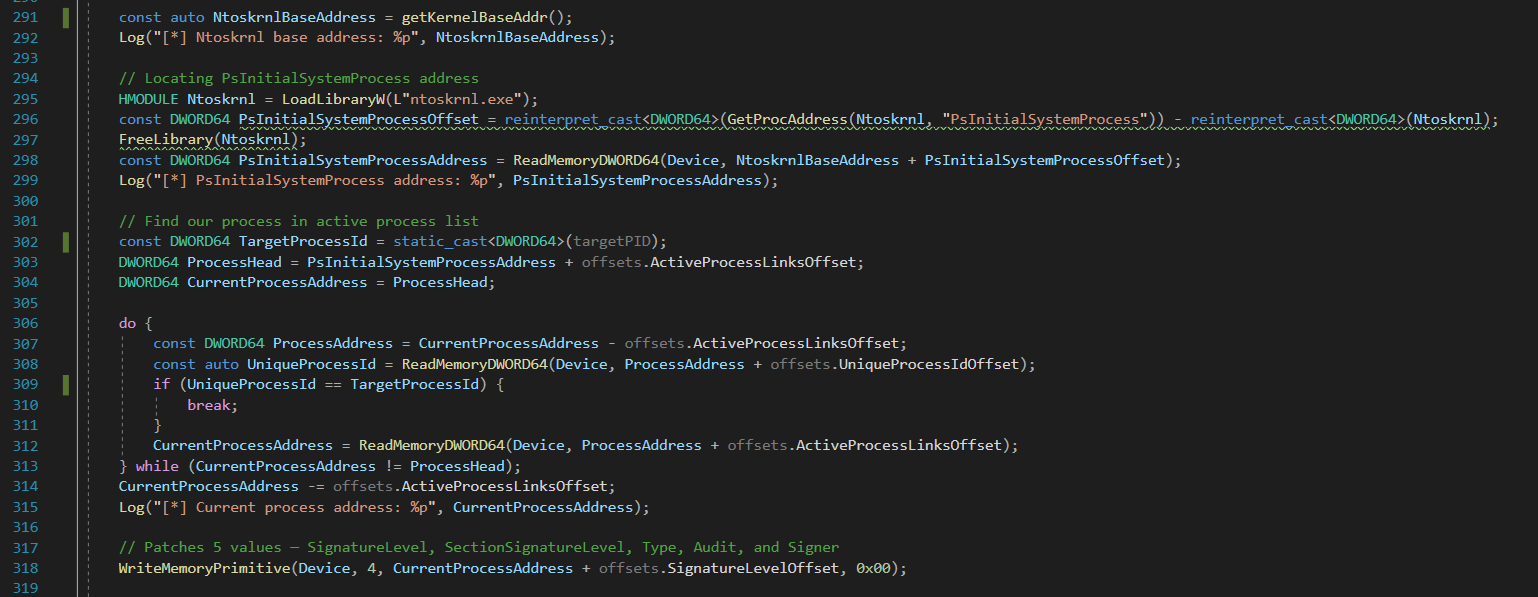

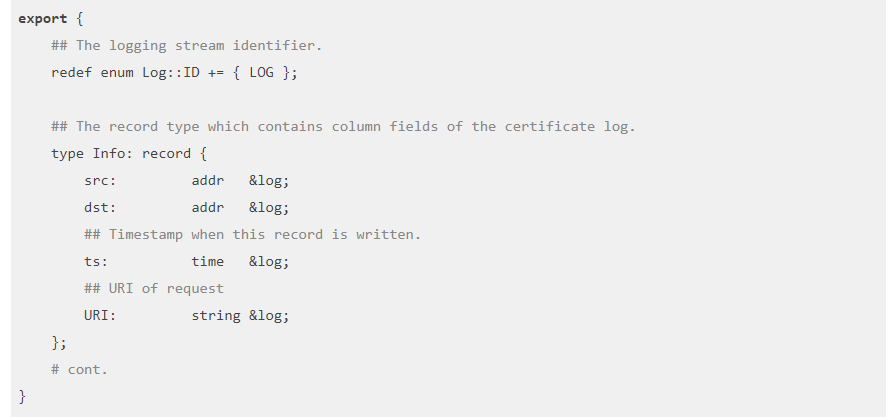

Specifically, malware development has become more sophisticated with the integration of AI. Hackers use platforms like ChatGPT to write and optimise malicious code, making it more efficient and harder to detect. AI can also be used to analyse vast datasets of known malware to create variants that can bypass traditional antivirus and malware detection mechanisms. This not only speeds up the malware development process but also makes the malware more adaptable and resilient. The speed at which malware adapts and evolves is greatly shortening the interval at which security testing and review must be repeated. A network or site that was secured according to best practices could, in theory, be vulnerable the following day or month without anyone having engaged in poor practices.
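To illustrate why exact-match signatures struggle with variants, here is a minimal sketch of a hash-based blocklist. It is an assumption-laden illustration, not how any particular antivirus product works: the sample bytes are harmless placeholders, and `SIGNATURE_BLOCKLIST` and `is_flagged` are hypothetical names invented for this example.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Harmless placeholder bytes standing in for a previously catalogued sample.
KNOWN_SAMPLE = b"placeholder-bytes-standing-in-for-a-known-sample"

# Hypothetical blocklist of exact-match signatures (file hashes).
SIGNATURE_BLOCKLIST = {sha256_hex(KNOWN_SAMPLE)}


def is_flagged(data: bytes) -> bool:
    """Flag data only if its hash exactly matches a known signature."""
    return sha256_hex(data) in SIGNATURE_BLOCKLIST


# A "variant" that differs from the known sample by a single trailing byte.
variant = KNOWN_SAMPLE + b" "

print(is_flagged(KNOWN_SAMPLE))  # True: exact match with the blocklist
print(is_flagged(variant))       # False: one changed byte yields a new hash
```

Because any change to the input produces a different digest, a blocklist of this kind only catches samples it has already seen, which is why rapidly generated variants put pressure on purely signature-based detection.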

Hackers can utilise open-source AI frameworks and chatbot development tools to create their own malicious chatbots. These frameworks, which are intended for legitimate uses like customer service and automation, can be repurposed to conduct social engineering attacks. Examples include Rasa, Botpress, and Microsoft Bot Framework. By customising these platforms, hackers can develop chatbots that mimic legitimate entities to phish for personal information or distribute malware.

Platforms like OpenAI’s ChatGPT, Google’s Bard (since renamed Gemini), and others based on large language models (LLMs) have been used creatively by hackers to generate convincing phishing emails, create malicious code snippets, or automate the creation of content for social engineering campaigns. Although these platforms have implemented safeguards to prevent misuse, determined individuals can find ways to bypass these measures or use the output to inform more sophisticated attacks, a strategy we have explored in earlier blog posts.

AI platforms specialised in generating or optimising code, such as GitHub Copilot, can potentially be exploited to write or refine malicious scripts and malware. While these tools are designed to improve productivity for developers, their ability to generate complex code based on simple prompts could be leveraged by hackers with enough technical know-how to guide the AI towards producing harmful software. We have explored the processes and capabilities of some platforms in our earlier blog posts.

Hackers with sufficient resources and expertise may develop custom AI models tailored for specific malicious purposes. These models can be trained on datasets of phishing emails, malicious code, or social engineering tactics to become highly effective at generating attacks that are difficult for traditional security tools to detect.

Platforms that enable the creation of social media bots can be exploited to spread misinformation or malicious links. These bots, which can automate posts and messages on platforms like Twitter, Facebook, and Instagram, can be used to create the illusion of legitimacy or urgency, tricking users into clicking on harmful links or divulging sensitive information.

The ability of AI to generate realistic and convincing text, images, and videos opens the door to a new form of attack: deepfakes. AI that specialises in creating these forgeries can be used in sophisticated phishing schemes and disinformation campaigns. Counterfeit content that impersonates trusted individuals or brands can facilitate scams, spread misinformation, or tarnish reputations. While not strictly chatbots, these technologies represent an advanced form of AI that can be used in conjunction with chatbots or other social engineering tactics to deceive victims. This capability significantly enhances the toolkit available to cybercriminals, making it harder for individuals and businesses to distinguish genuine communications from malicious ones.

Social engineering attacks, which rely on human interaction and manipulation to gain unauthorised access to confidential information, have also become more sophisticated thanks to AI. Chatbots powered by platforms similar to ChatGPT can be programmed to impersonate humans in convincing ways, engaging in interactions that can trick individuals into revealing sensitive information or performing actions that compromise security. These AI-enhanced social engineering tactics target a wide range of victims, from low-level employees to high-ranking executives, making no one immune to their reach. Regular education and awareness training to help identify and avoid security pitfalls is now a must for all businesses with any sort of public footprint.

AI platforms enable the automation of attacks, allowing hackers to launch widespread phishing campaigns with little effort. By leveraging these technologies, bad actors can generate personalised phishing emails in bulk, targeting thousands of individuals across multiple organisations simultaneously. This scalability not only increases the potential success rate of these attacks but also poses a significant challenge for organisations trying to defend against them. The speed at which new or “improved” content is generated and distributed makes a purely reactive approach, one that relies only on security bulletins or lists of known campaigns or vulnerabilities, very difficult to sustain. Organisations must be proactive in the way they approach cybersecurity.
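As a rough sketch of what a more proactive check might look like, the snippet below scores a message on general indicators rather than looking it up in a list of known campaigns. Everything here is an assumption made for illustration: the `URGENCY_TERMS` list, the `phishing_indicators` function, and the example domains (which use reserved test names) are hypothetical, and a real mail filter would weigh far more signals.

```python
import re
from typing import List
from urllib.parse import urlparse

# Hypothetical, deliberately short list of pressure phrases.
URGENCY_TERMS = ("urgent", "immediately", "verify your account",
                 "password expires", "suspended")

# Simple pattern for http(s) URLs embedded in plain text.
URL_PATTERN = re.compile(r"https?://[^\s)>\]]+")


def phishing_indicators(sender_domain: str, body: str) -> List[str]:
    """Return human-readable reasons a message looks suspicious."""
    findings = []
    lowered = body.lower()

    # Indicator 1: language that pressures the reader to act quickly.
    for term in URGENCY_TERMS:
        if term in lowered:
            findings.append(f"urgency language: {term!r}")

    # Indicator 2: links whose host is not the sender's domain or a subdomain.
    for url in URL_PATTERN.findall(body):
        host = (urlparse(url).hostname or "").lower()
        same_org = host == sender_domain or host.endswith("." + sender_domain)
        if host and not same_org:
            findings.append(f"link host {host!r} does not match sender domain")

    return findings


suspicious = ("Urgent: your password expires today. "
              "Sign in at http://login.example.com.attacker.test/reset")
benign = "Minutes attached. See https://docs.example.com/notes"

print(phishing_indicators("example.com", suspicious))
print(phishing_indicators("example.com", benign))  # []
```

The point of the design is that none of the checks depend on having seen this exact email before, so freshly generated content can still be flagged for a human to review.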

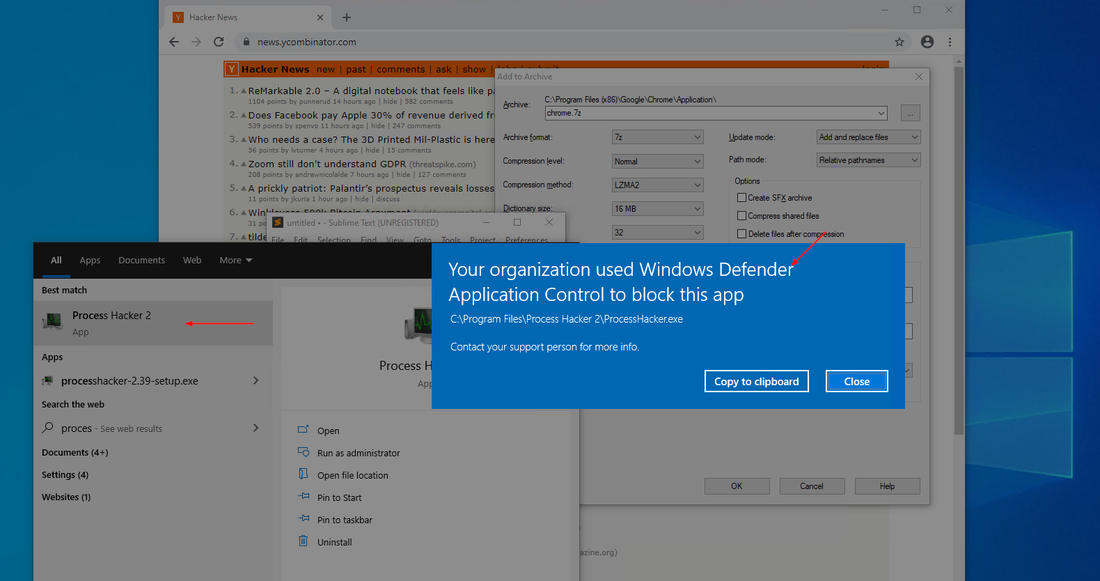

The exploitation of AI by hackers presents new challenges for security and network teams. Without the support of cybersecurity professionals, who are expected to stay across the latest trends, styles and attack mechanisms, it is very difficult to keep pace with the variety of ways in which hackers try to penetrate a business. Traditional defence mechanisms, which rely on detecting known patterns of malicious activity, may not be effective against the novel and evolving tactics powered by AI. Furthermore, the ethical and regulatory frameworks surrounding the use of AI in cybersecurity are still in development, complicating efforts to mitigate these risks. The role of the cybersecurity professional or specialist has evolved with the industry and now requires a great deal more research and monitoring of industry trends. Organisations must also evolve, increasing the frequency with which they undertake security measures such as penetration testing and team training to keep pace with the speed at which the industry is changing.

To counteract these threats, businesses and cybersecurity professionals must adopt a proactive and dynamic approach to security. This includes investing in advanced threat detection and response systems that can adapt to the evolving tactics of AI-powered attacks. Education and awareness training for employees are also crucial to combat social engineering attacks. Moreover, collaboration between AI developers, cybersecurity experts, and regulatory bodies is essential to establish ethical guidelines and security standards for AI technologies.
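One simple way to picture detection that adapts to behaviour rather than to known signatures is a statistical baseline. The sketch below flags a value that deviates sharply from recent history using a z-score. It is only an illustration under stated assumptions: the failed-login counts are made-up numbers, the threshold of 3 standard deviations is an arbitrary choice, and `is_anomalous` is a hypothetical helper, not part of any product.

```python
from statistics import mean, stdev
from typing import Sequence


def is_anomalous(history: Sequence[float], current: float,
                 threshold: float = 3.0) -> bool:
    """Flag `current` if it lies more than `threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return current != mu  # any change from a flat baseline stands out
    return abs(current - mu) / sigma > threshold


# Made-up hourly counts of failed logins used as the baseline.
baseline = [4, 6, 5, 7, 5, 6, 4, 5]

print(is_anomalous(baseline, 6))   # False: within normal variation
print(is_anomalous(baseline, 40))  # True: far outside the baseline
```

A baseline like this needs no prior knowledge of a specific attack, although in practice it would be one signal among many and would need tuning to keep false alarms manageable.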

As AI technology continues to evolve, the arms race between cybersecurity professionals and hackers will intensify. The development of AI-powered security solutions offers hope for defending against sophisticated attacks. However, the accessibility of AI platforms also means that bad actors will continue to find innovative ways to exploit these technologies. The future of cybersecurity will depend on our ability to leverage AI for defence as effectively as hackers use it for offence.

The leveraging of AI platforms by hackers and bad actors underscores a critical paradox of our digital age: the very tools designed to propel our society forward can also be used to undermine it. Our reliance on technology to operate our businesses effectively is matched by the growing sophistication of cyber threats, presenting an ongoing challenge in cybersecurity. Understanding the capabilities of AI and the ways in which it can be exploited is paramount for cybersecurity professionals, and organisations need to use this knowledge to better prepare themselves for the ever-evolving landscape of cyber threats. The battle against AI-powered cyber threats is complex and requires constant vigilance, innovation, and collaboration to ensure the security and integrity of the digital world.