Kubernetes Security Part 1 – Creating a test Kubernetes Cluster with kubeadm

As enterprises move towards cloud computing, large platforms such as AWS bring complex infrastructure with equally complex security concerns, and Kubernetes clusters are no exception. Red Cursor has started testing applications that run as containers within these clusters, and having access to a running, reproducible test environment is becoming vital for security research. This blog post details creating a test Kubernetes cluster with Kubeadm, consisting of one control plane node and two worker nodes, semi-automated using Vagrant.

When creating this cluster we referred to the Kubeadm documentation and the Arch Linux wiki, which mention some caveats for installing Kubeadm that are important to be aware of, namely:

- Swap space has to be disabled for the Kubelet service to start.

- The Kubelet service needs to be manually enabled and started.

- The Kubeadm tool requires a back-end container system such as Docker to be enabled and running.
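These caveats can be checked on a node before running Kubeadm. The following is a minimal sketch, not part of the original scripts: `swap_is_off` and `preflight` are hypothetical helper names, and the check assumes the usual Linux `/proc/swaps` layout (one header line, then one line per active swap area).

```shell
# Hypothetical helpers for illustration; not part of the original scripts.

# Succeeds when the given swaps file (default: /proc/swaps) lists no swap areas.
swap_is_off() {
  swaps_file="${1:-/proc/swaps}"
  # The first line is a header; every further line is an active swap area.
  [ "$(awk 'NR > 1' "$swaps_file" | wc -l)" -eq 0 ]
}

# Checks the three caveats in order and reports the first one that fails.
preflight() {
  swap_is_off || { echo "swap is still enabled" >&2; return 1; }
  systemctl is-enabled --quiet kubelet.service \
    || { echo "kubelet.service is not enabled" >&2; return 1; }
  systemctl is-active --quiet docker.service \
    || { echo "docker.service is not running" >&2; return 1; }
}
```

Running `preflight` on a freshly provisioned node should exit silently with status 0 if all three conditions hold.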

Creating Shell Scripts

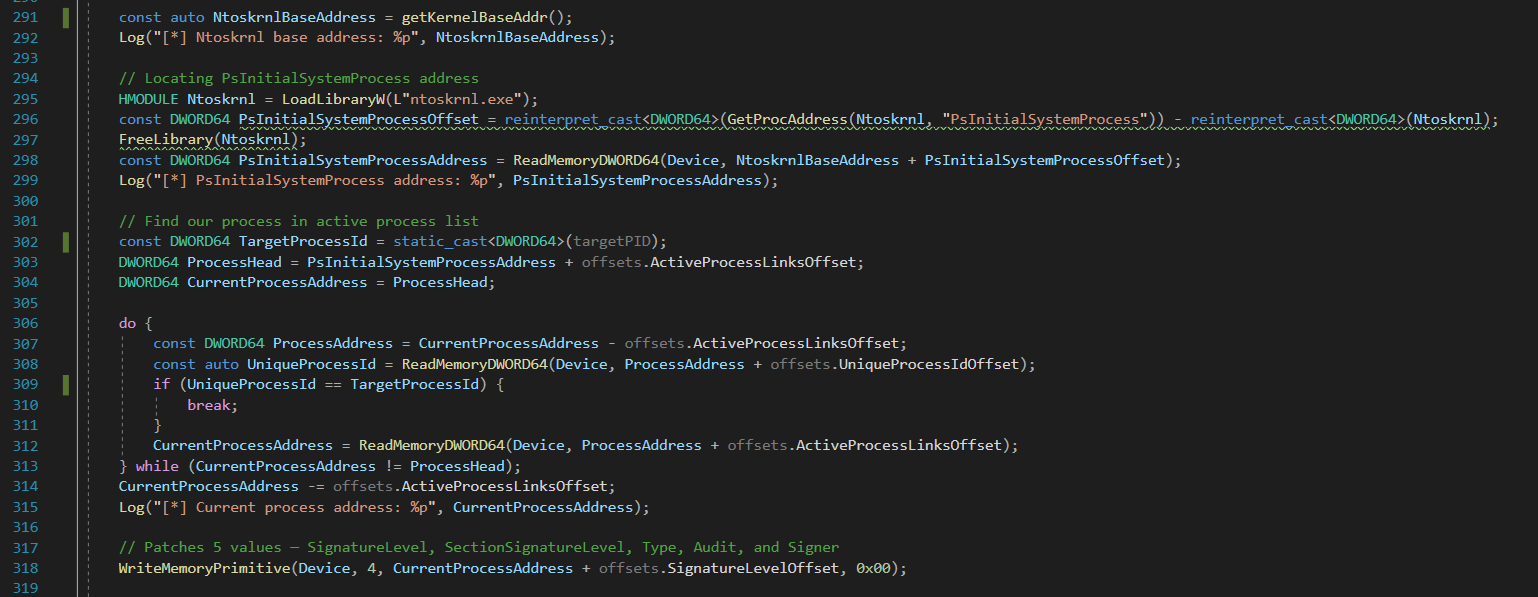

The following shell script was used to meet these requirements (with a one-line change to initialize Kubeadm on the controlplane node):

sudo pacman -Syy

printf "y\ny\ny\n" | sudo pacman -S iptables-nft kubelet kubeadm docker vim

sudo mkdir -p /etc/docker

printf '{\n"storage-driver": "overlay2"\n}\n' | sudo tee /etc/docker/daemon.json

sudo swapoff -a

sudo systemctl enable kubelet.service

sudo systemctl enable docker.service

sudo systemctl start docker.service

This script installs the necessary packages for the machines, sets the storage-driver to overlay2 as btrfs is not supported by Kubeadm, disables swap, enables and starts the docker service, and finally enables the Kubelet service. For our control plane we perform much of the same logic, but we additionally install Kubectl and run Kubeadm to initialize our cluster.

sudo pacman -Syy

printf "y\ny\ny\n" | sudo pacman -S kubelet kubeadm kubectl docker vim

sudo mkdir -p /etc/docker

printf '{\n"storage-driver": "overlay2"\n}\n' | sudo tee /etc/docker/daemon.json

sudo swapoff -a

sudo systemctl enable kubelet.service

sudo systemctl enable docker.service

sudo systemctl start docker.service

sudo kubeadm init --ignore-preflight-errors=all --apiserver-advertise-address=192.168.56.2

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Using Vagrant to deploy machines to our test Kubernetes Cluster

When creating a test Kubernetes cluster we require virtual machines to act as each of the nodes. Vagrant is used to create and manage the virtual machines so they can quickly be reset and recreated. For the virtual machine OS, I decided to use Arch Linux because, in normal installations, I find it has a smaller footprint than other operating systems such as Ubuntu, and it supports installing the needed tools from the default repositories.
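Since the machines are meant to be reset and re-provisioned, it helps if each provisioning step is safe to run more than once. As a minimal sketch, the Docker configuration step from the scripts above could be wrapped as follows; `write_docker_config` is a hypothetical helper name, and taking the target directory as an argument is an assumption made here so the step can be tried outside `/etc`.

```shell
# Hypothetical helper for illustration; not part of the original scripts.
# Writes daemon.json with the overlay2 storage driver into the given
# directory (default: /etc/docker). Safe to call repeatedly.
write_docker_config() {
  config_dir="${1:-/etc/docker}"
  # -p makes mkdir succeed even when the directory already exists.
  mkdir -p "$config_dir" || return 1
  # Overwrite rather than append, so repeated runs give the same file.
  printf '{\n"storage-driver": "overlay2"\n}\n' > "$config_dir/daemon.json"
}
```

Inside a provisioning script this would be called as `write_docker_config /etc/docker`. That works without sudo when the script already runs as root, which is the default for Vagrant's shell provisioner.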

The machines can be created by using the following Vagrantfile:

# -*- mode: ruby -*-

# vi:set ft=ruby sw=2 ts=2 sts=2:

# Define the number of master and worker nodes

NUM_MASTER_NODE = 1

NUM_WORKER_NODE = 2

IP_NW = "192.168.56."

MASTER_IP_START = 1

NODE_IP_START = 2

Vagrant.configure("2") do |config|

config.vm.box = "archlinux/archlinux"

config.vm.box_check_update = false

# Provision Master Nodes

(1..NUM_MASTER_NODE).each do |i|

config.vm.define "kubemaster" do |node|

node.vm.provider "virtualbox" do |vb|

vb.name = "kubemaster"

vb.memory = 2048

vb.cpus = 2

end

node.vm.hostname = "kubemaster"

node.vm.network :private_network, ip: IP_NW + "#{MASTER_IP_START + i}"

node.vm.network "forwarded_port", guest: 22, host: "#{2710 + i}"

node.vm.provision "setup-kubemaster", :type => "shell", :path => "scripts/install-controlplane.sh" do |s|

s.args = []

end

end

end

# Provision Worker Nodes

(1..NUM_WORKER_NODE).each do |i|

config.vm.define "kubenode0#{i}" do |node|

node.vm.provider "virtualbox" do |vb|

vb.name = "kubenode0#{i}"

vb.memory = 2048

vb.cpus = 2

end

node.vm.hostname = "kubenode0#{i}"

node.vm.network :private_network, ip: IP_NW + "#{NODE_IP_START + i}"

node.vm.network "forwarded_port", guest: 22, host: "#{2720 + i}"

node.vm.provision "setup-kubenode", :type => "shell", :path => "scripts/install-node.sh" do |s|

s.args = []

end

end

end

end

Now we can run vagrant up and watch as our boxes are deployed and configured:

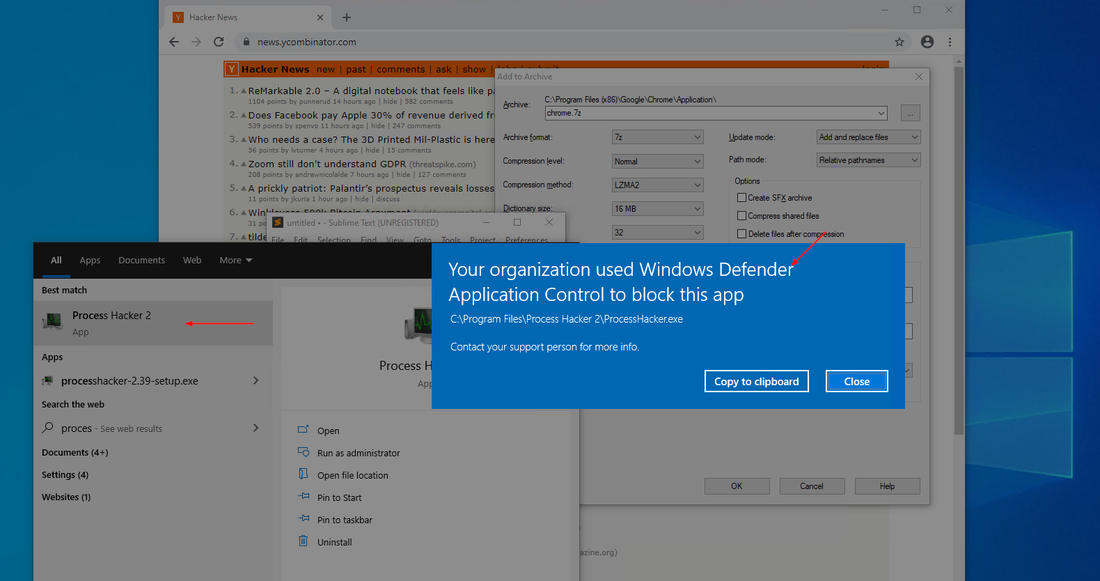

vagrant up

During this boot-up process, an important command will show up in the Vagrant logs of our kubemaster machine:

Write the command down as it is used to join each Kubernetes node to the test cluster.
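If the command scrolls past, it can be recovered from a saved log. The following is a minimal sketch under two assumptions: the provisioning output was captured to a file (for example with `vagrant up 2>&1 | tee vagrant.log`, where `vagrant.log` is a hypothetical file name), and Vagrant prefixed each line with `kubemaster:`. `extract_join_command` is a hypothetical helper name.

```shell
# Hypothetical helper for illustration; not part of the original scripts.
# Reads a provisioning log on stdin and prints the first "kubeadm join"
# command as a single line, folding backslash-continued lines together.
extract_join_command() {
  # Drop the "kubemaster: " prefix that Vagrant puts in front of each line.
  sed -E 's/^ *kubemaster: ?//' |
  awk '
    /^[ \t]*kubeadm join / { capture = 1 }
    capture {
      line = $0
      # sub() returns 1 when a trailing backslash was removed,
      # which means the command continues on the next line.
      cont = sub(/[ \t]*\\$/, "", line)
      gsub(/^[ \t]+/, "", line)
      out = out (out == "" ? "" : " ") line
      if (!cont) { print out; exit }
    }'
}

# Usage: extract_join_command < vagrant.log
```

Alternatively, running `sudo kubeadm token create --print-join-command` on the control plane node prints a fresh join command.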

Configuring our test Kubernetes Cluster

Once all of our machines are online, we can use vagrant ssh kubemaster to access our control plane node and start issuing commands. First, we check that kubectl is correctly configured and that our control plane node exists within the cluster:

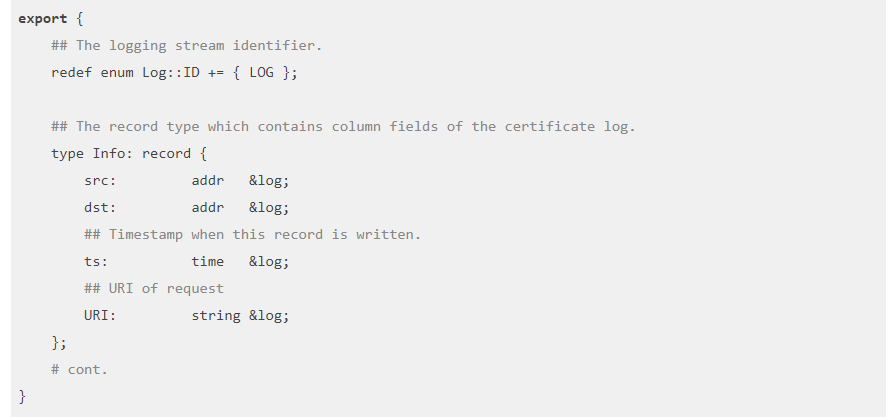

Next, we deploy a networking solution such as Flannel. To deploy Flannel, run the command shown on its GitHub page:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

We can now register each node to our cluster by using vagrant ssh kubenode01 and vagrant ssh kubenode02 and running our previously saved Kubeadm join command:

kubeadm join

Now we can check the status of our nodes:
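The status is listed by `kubectl get nodes` on the control plane node. As a minimal sketch, the output can be filtered for nodes that have not yet reached the Ready state; `not_ready_nodes` is a hypothetical helper name, and it assumes the default column layout in which the node name comes first and the status second.

```shell
# Hypothetical helper for illustration; not part of the original scripts.
# Reads `kubectl get nodes --no-headers` output on stdin and prints the
# name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage: kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every listed node reports Ready. Nodes can stay NotReady for a short while after joining, until the network add-on is running on them.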

We are now ready to start testing using our new test Kubernetes cluster.